The GPU in your primary gaming desktop will be your best bet for running models. First, check your card's exact specs: the more VRAM, the better. Nvidia is preferred, but AMD cards work too.
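If you want to check VRAM from a script, here is a minimal sketch for Nvidia cards. It assumes the `nvidia-smi` tool that ships with the Nvidia driver is on your PATH; the function names are just mine for illustration. It does not cover AMD cards, where you would need a different tool.

```python
import shutil
import subprocess


def parse_gpu_csv(text):
    """Parse 'name, memory.total' CSV lines into (name, vram_mib) tuples."""
    gpus = []
    for line in text.strip().splitlines():
        # Split on the last comma so commas in a card name cannot break parsing.
        name, mem = [part.strip() for part in line.rsplit(",", 1)]
        gpus.append((name, int(mem)))
    return gpus


def query_nvidia_gpus():
    """Return [(name, vram_mib)] via nvidia-smi, or [] if the tool is missing."""
    if shutil.which("nvidia-smi") is None:
        return []
    out = subprocess.run(
        ["nvidia-smi", "--query-gpu=name,memory.total",
         "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    ).stdout
    return parse_gpu_csv(out)


if __name__ == "__main__":
    for name, vram in query_nvidia_gpus():
        print(f"{name}: {vram} MiB VRAM")
```

With `nounits`, the memory column comes back as a plain number in MiB, which is why it can be cast straight to `int`.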

To get started with no fuss, play with llamafiles first: download one and follow the quickstart to run it as an app.

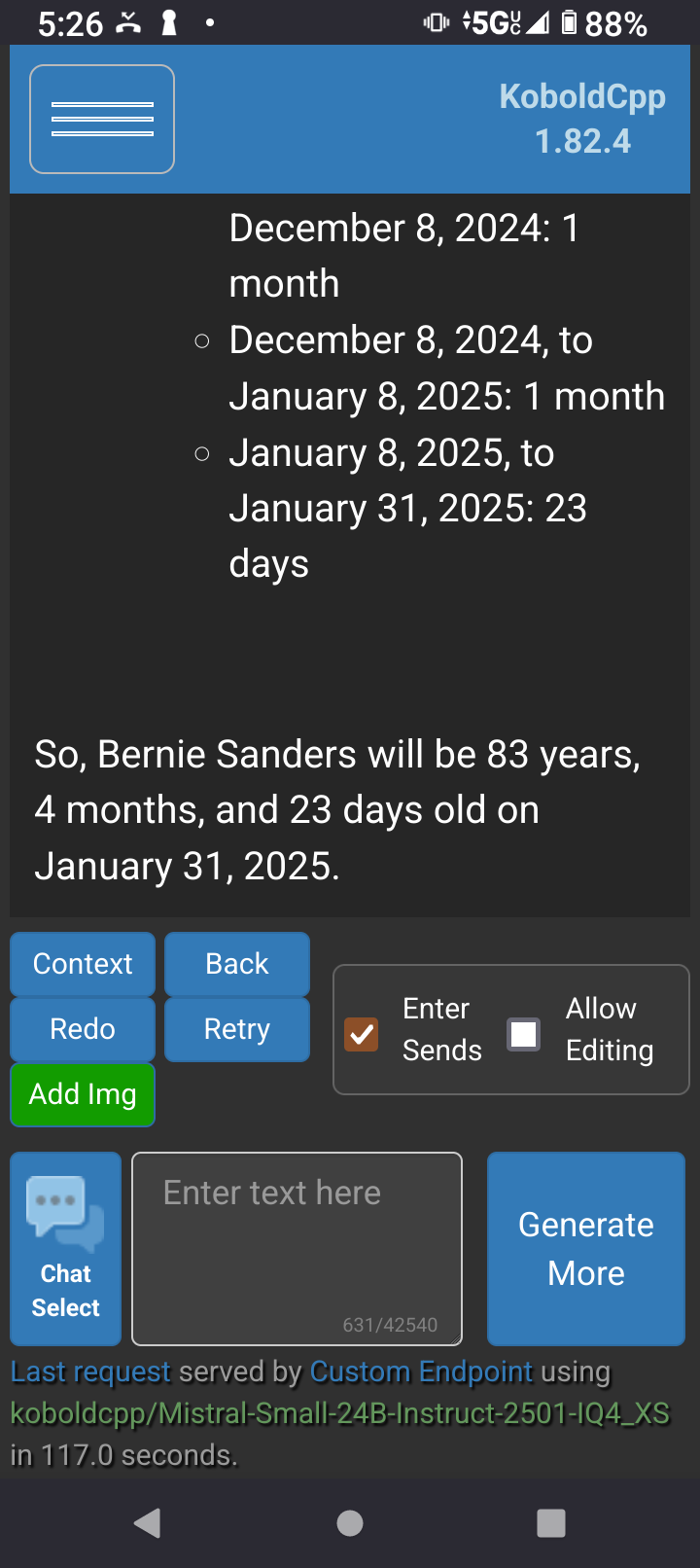

Once you have it running, have learned the ropes a little, and want more, like better performance or the latest models, you can spend some time installing and running kobold.cpp, with cuBLAS for Nvidia or Vulkan for AMD, to offload layers onto the GPU.
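To get a feel for how many layers to offload, here is a rough sketch. It assumes every layer takes about the same share of the model file and that you leave some VRAM free for context and the desktop (the 1.5 GiB reserve is my guess, not a measured value). The flag names (`--model`, `--usecublas`, `--usevulkan`, `--gpulayers`) are the ones I know from kobold.cpp, but check `--help` on your version, and treat the script name and model path as placeholders.

```python
def estimate_gpu_layers(model_size_gib, n_layers, vram_gib, reserve_gib=1.5):
    """Rough guess at how many layers fit in VRAM, assuming equal-sized layers."""
    per_layer = model_size_gib / n_layers
    usable = max(vram_gib - reserve_gib, 0.0)
    # Never report more layers than the model actually has.
    return min(n_layers, int(usable // per_layer))


def kobold_command(model_path, vendor, gpu_layers):
    """Build a kobold.cpp launch command for an 'nvidia' or 'amd' card."""
    backend = {"nvidia": "--usecublas", "amd": "--usevulkan"}[vendor]
    return ["python", "koboldcpp.py", "--model", model_path,
            backend, "--gpulayers", str(gpu_layers)]


if __name__ == "__main__":
    # Hypothetical numbers: a 4 GiB model file with 32 layers on a 4 GiB card.
    layers = estimate_gpu_layers(4.0, 32, 4.0)
    print(" ".join(kobold_command("model.gguf", "amd", layers)))
```

Treat the estimate as a starting point: if loading fails or generation gets slow, lower the layer count and try again.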

If you are on Linux, you can boot into a CLI-only environment (no desktop) to save some VRAM.

Connect to the program from your phone, a Pi, or another PC using the desktop's local IP address and the open port.
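Besides opening the web UI in a browser, you can talk to the server from a script on another machine. This sketch assumes kobold.cpp is serving its KoboldAI-style `/api/v1/generate` endpoint on port 5001 (its usual default, as far as I know) and that `192.168.1.50` stands in for your desktop's LAN address; both are placeholders to swap for your own setup.

```python
import json
import urllib.request


def build_generate_request(host, port, prompt, max_length=80):
    """Build a POST request for the KoboldAI-style generate endpoint."""
    url = f"http://{host}:{port}/api/v1/generate"
    body = json.dumps({"prompt": prompt, "max_length": max_length}).encode("utf-8")
    return urllib.request.Request(
        url, data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(host, port, prompt, max_length=80, timeout=120):
    """Send the prompt to the server and return the generated text."""
    req = build_generate_request(host, port, prompt, max_length)
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        data = json.load(resp)
    # Assumed response shape: {"results": [{"text": "..."}]}
    return data["results"][0]["text"]


if __name__ == "__main__":
    # Placeholder address: replace with your desktop's local IP and port.
    print(generate("192.168.1.50", 5001, "Hello, my name is"))
```

If the connection is refused, check that the desktop's firewall allows the port and that both devices are on the same network.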

In theory, you can pool all your devices into a distributed setup with something like exo.

Don't give up, you got this! I had luck using Vulkan in kobold.cpp as a substitute for ROCm with my AMD RX 580 card.